Comprehensive Network Security at Splunk

Security from Layer 3 to Layer 7 with Istio and more.

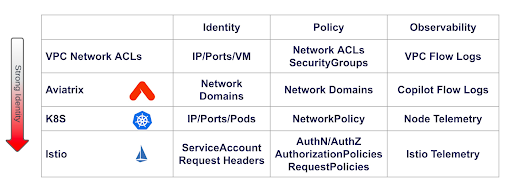

With dozens of tools for securing your network available, it is easy to find tutorials and demonstrations illustrating how these individual tools make your network more secure by adding identity, policy, and observability to your traffic. What is often less clear is how these tools interoperate to provide comprehensive security for your network in production. How many tools do you need? When is your network secure enough?

This post explores the tools and practices Splunk uses to secure its Kubernetes network infrastructure, starting with VPC design and connectivity and working all the way up the stack to HTTP request-based security. Along the way, we'll see what it takes to provide comprehensive network security for a cloud native stack, how these tools interoperate, and where some of them can improve. Splunk uses a variety of tools to secure its network, including:

- AWS networking features (VPCs, Network ACLs, Security Groups, NLBs)

- Kubernetes

- Istio

- Envoy

- Aviatrix

About Splunk’s Use Case

Splunk is a technology company that provides a platform for collecting, analyzing and visualizing data generated by various sources. It is primarily used for searching, monitoring, and analyzing machine-generated big data through a web-style interface. Splunk Cloud is an initiative to move Splunk’s internal infrastructure to a cloud native architecture. Today Splunk Cloud consists of over 35 fully replicated clusters in AWS and GCP in regions around the world.

Securing Layer 3/4: AWS, Aviatrix and Kubernetes

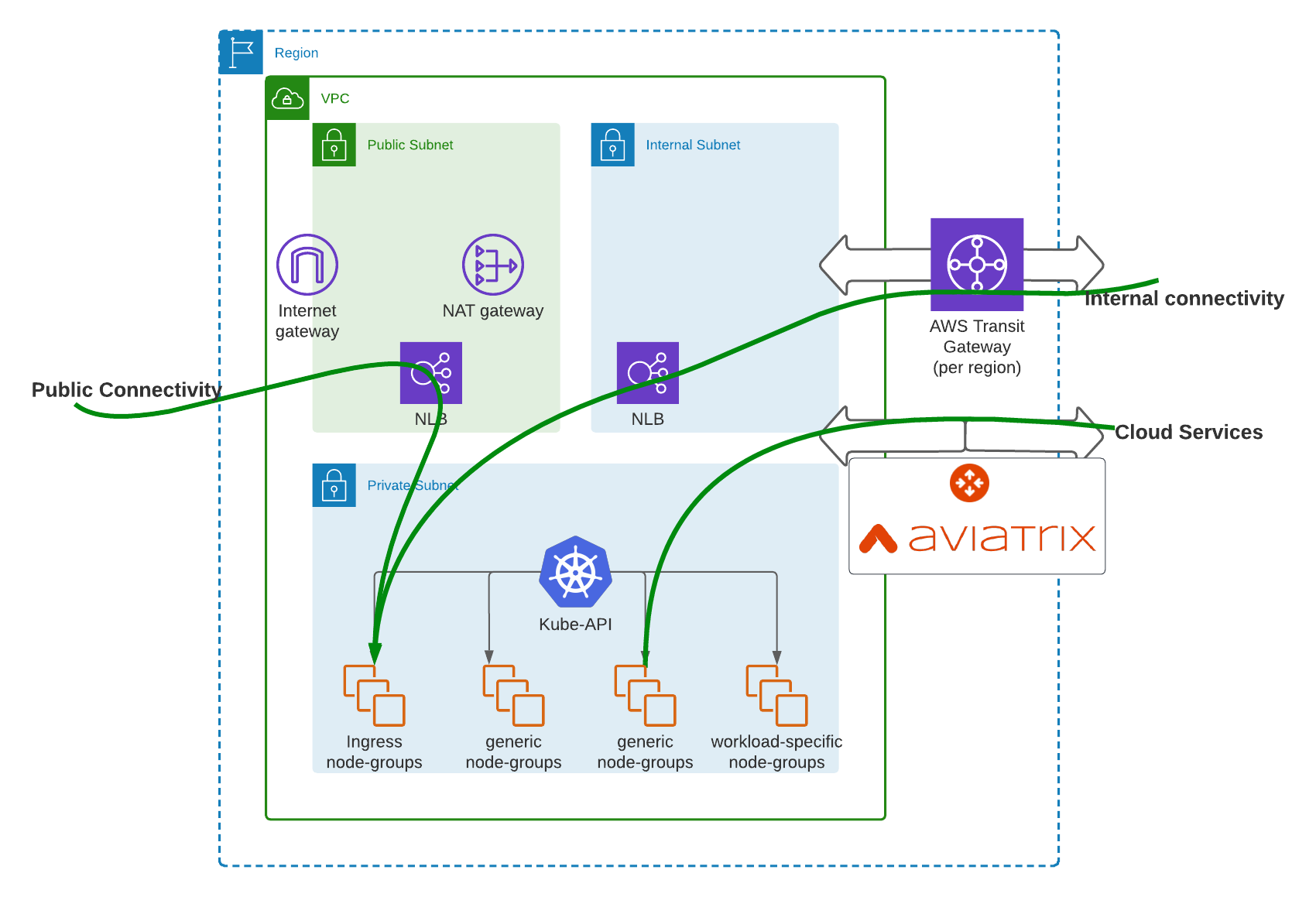

At Splunk Cloud, we use a pattern called "cookie cutter VPCs": each cluster is provisioned with its own VPC, containing identical private subnets for Pod and Node IPs, a public subnet for ingress and egress to and from the public internet, and an internal subnet for traffic between clusters. This keeps Pods and Nodes from separate clusters completely isolated, while traffic leaving a cluster passes through the public and internal subnets, where specific rules can be enforced. Additionally, because every cluster reuses the same private ranges, this pattern avoids RFC 1918 private IP exhaustion when running many clusters.
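The address-space argument can be made concrete with Python's standard `ipaddress` module. This is a minimal sketch that assumes, purely for illustration, that each cluster needs one /16 for Pods and Nodes; the post does not publish Splunk's actual CIDRs, and the function names are hypothetical. Carving a distinct block per cluster exhausts `10.0.0.0/8` after 256 clusters, while the cookie cutter approach reuses one block and relies on VPC boundaries for isolation.

```python
import ipaddress

# Assumption for illustration: every cluster needs one /16 for Pods and Nodes.
CLUSTER_PREFIX = 16
RFC1918_BLOCK = ipaddress.ip_network("10.0.0.0/8")


def unique_allocations(cluster_count: int) -> list:
    """Carve a distinct private block per cluster (the non-cookie-cutter design)."""
    blocks = RFC1918_BLOCK.subnets(new_prefix=CLUSTER_PREFIX)
    allocations = []
    for _ in range(cluster_count):
        block = next(blocks, None)
        if block is None:
            raise RuntimeError(
                f"{RFC1918_BLOCK} exhausted after {len(allocations)} clusters"
            )
        allocations.append(block)
    return allocations


def cookie_cutter_allocations(cluster_count: int, cidr: str = "10.0.0.0/16") -> list:
    """Give every cluster the same private block; each one lives in its own VPC."""
    shared = ipaddress.ip_network(cidr)
    return [shared] * cluster_count


# Distinct blocks run out at 256 clusters; identical blocks never do.
print(len(unique_allocations(256)))               # 256
print(len(set(cookie_cutter_allocations(1000))))  # 1
```

The trade-off is that identical ranges cannot be routed to each other directly, which is why cross-cluster and shared-service traffic needs a dedicated path.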

Within each VPC, Network ACLs and Security Groups restrict connectivity to what is absolutely required. For example, we allow public connectivity only to our ingress nodes, which run the Envoy ingress gateways. In addition to ordinary east/west and north/south traffic, there are also shared services at Splunk that every cluster needs to reach. Aviatrix provides access to these services across VPCs with overlapping address ranges, while also enforcing some high-level security rules (segmentation per domain).
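To illustrate how a Network ACL decides whether traffic reaches the ingress nodes, here is a minimal Python sketch of inbound evaluation: AWS checks rules in ascending rule-number order, the first match decides, and the catch-all `*` rule denies anything unmatched. The rule set, CIDRs, and helper names are hypothetical, and the sketch ignores protocols and the fact that NACLs are stateless (return traffic needs its own outbound rules).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NaclRule:
    number: int      # AWS evaluates rules in ascending rule-number order
    cidr: str        # source CIDR for an inbound rule
    port_from: int
    port_to: int
    action: str      # "allow" or "deny"


def evaluate_inbound(rules, source_ip: str, port: int) -> str:
    """First matching rule wins; if nothing matches, the catch-all rule denies."""
    ip = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        in_range = ip in ipaddress.ip_network(rule.cidr)
        if in_range and rule.port_from <= port <= rule.port_to:
            return rule.action
    return "deny"


# Hypothetical inbound rules for the public subnet in front of the ingress nodes.
INGRESS_SUBNET_RULES = [
    NaclRule(50, "198.51.100.0/24", 0, 65535, "deny"),  # a blocked source range
    NaclRule(100, "0.0.0.0/0", 443, 443, "allow"),      # public HTTPS only
]

print(evaluate_inbound(INGRESS_SUBNET_RULES, "203.0.113.7", 443))   # allow
print(evaluate_inbound(INGRESS_SUBNET_RULES, "203.0.113.7", 22))    # deny
print(evaluate_inbound(INGRESS_SUBNET_RULES, "198.51.100.9", 443))  # deny
```

Security Groups differ in that they are stateful and contain only allow rules, so in practice they act as a second, per-instance filter behind the subnet-level NACL.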

The next security layer in Splunk's stack is Kubernetes itself. Validating Webhooks prevent the deployment of K8S objects that would allow insecure traffic into the cluster (typically Services and the NLBs they provision). Splunk also relies on NetworkPolicies to restrict Pod-to-Pod connectivity.
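As an illustration of the kind of check such a webhook performs, the following Python sketch builds an `admission.k8s.io/v1` AdmissionReview response for a Service. The policy itself is an assumption, not Splunk's actual rule: only a hypothetical platform namespace (`istio-gateways`) may create internet-facing `LoadBalancer` Services. It reads the AWS Load Balancer Controller's `service.beta.kubernetes.io/aws-load-balancer-scheme` annotation and, as a conservative choice, treats a missing annotation as internet-facing. The HTTP server that receives the review is omitted.

```python
# Hypothetical policy: only the platform-owned gateway namespace may expose
# an internet-facing load balancer.
GATEWAY_NAMESPACE = "istio-gateways"
SCHEME_ANNOTATION = "service.beta.kubernetes.io/aws-load-balancer-scheme"


def review_service(admission_review: dict) -> dict:
    """Build an admission.k8s.io/v1 AdmissionReview response for a Service."""
    request = admission_review["request"]
    service = request["object"]
    annotations = service.get("metadata", {}).get("annotations") or {}
    is_load_balancer = service.get("spec", {}).get("type") == "LoadBalancer"
    # Conservative: anything not explicitly "internal" counts as internet-facing.
    is_internal = annotations.get(SCHEME_ANNOTATION) == "internal"
    in_gateway_ns = request.get("namespace") == GATEWAY_NAMESPACE

    allowed = (not is_load_balancer) or is_internal or in_gateway_ns
    response = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {
            "code": 403,
            "message": "internet-facing load balancers are reserved "
                       "for the gateway namespace",
        }
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


review = {"request": {"uid": "abc-123", "namespace": "team-a",
                      "object": {"spec": {"type": "LoadBalancer"}}}}
print(review_service(review)["response"]["allowed"])  # False
```

Returning the request's `uid` in the response is required so the API server can match the verdict to the object being admitted.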

Securing Layer 7: Istio

Splunk uses Istio to enforce policy at the application layer based on the details of each request. Istio also emits telemetry data (metrics, logs, and traces) that is useful for validating request-level security.
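Request-level policy in Istio is typically expressed with AuthorizationPolicy resources. The Python sketch below models Istio's documented evaluation order in simplified form: a matching DENY policy rejects the request, a workload with no ALLOW policies accepts it, and otherwise at least one ALLOW policy must match. It is an illustration only: CUSTOM (external) policies are left out, path matching is reduced to prefixes, and the `Policy` and `Request` classes are hypothetical stand-ins, not Istio's API.

```python
from dataclasses import dataclass


@dataclass
class Request:
    principal: str  # peer identity taken from its mTLS certificate
    method: str
    path: str


@dataclass
class Policy:
    action: str                          # "ALLOW" or "DENY"
    principals: frozenset = frozenset()  # empty means "any principal"
    methods: frozenset = frozenset()     # empty means "any method"
    path_prefixes: tuple = ()            # empty means "any path"

    def matches(self, req: Request) -> bool:
        return ((not self.principals or req.principal in self.principals)
                and (not self.methods or req.method in self.methods)
                and (not self.path_prefixes
                     or any(req.path.startswith(p) for p in self.path_prefixes)))


def authorize(policies, req: Request) -> bool:
    # 1. Any matching DENY policy rejects the request.
    if any(p.action == "DENY" and p.matches(req) for p in policies):
        return False
    allow_policies = [p for p in policies if p.action == "ALLOW"]
    # 2. With no ALLOW policies on the workload, the request is allowed.
    if not allow_policies:
        return True
    # 3. Otherwise at least one ALLOW policy must match.
    return any(p.matches(req) for p in allow_policies)


policies = [
    Policy("DENY", path_prefixes=("/admin",)),
    Policy("ALLOW", principals=frozenset({"cluster.local/ns/web/sa/frontend"}),
           methods=frozenset({"GET"})),
]
print(authorize(policies, Request("cluster.local/ns/web/sa/frontend", "GET", "/api/search")))  # True
print(authorize(policies, Request("cluster.local/ns/web/sa/frontend", "GET", "/admin/users")))  # False
print(authorize(policies, Request("cluster.local/ns/batch/sa/job", "GET", "/api/search")))  # False
```

Evaluating DENY before ALLOW means a broad allow rule can never accidentally override a targeted block.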

One of the key benefits of Istio's injection of Envoy sidecars is that Istio can provide in-transit encryption for the entire mesh without requiring any modifications to the applications. The applications send plain-text HTTP requests, but the Envoy sidecar intercepts the traffic and wraps it in mutual TLS (mTLS), protecting it against interception or modification.
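What distinguishes mutual TLS from ordinary TLS is that both sides must present and verify certificates. The following sketch uses Python's standard `ssl` module to show the two contexts a proxy would need; it is a conceptual illustration, not how Envoy is configured. Loading the workload certificate, key, and mesh CA is left as a comment because those files are deployment-specific, and the identity check assumes SPIFFE-style URI SANs, which is the identity format Istio uses. The helper names are hypothetical.

```python
import ssl


def mtls_server_context() -> ssl.SSLContext:
    """Server side of mutual TLS: the peer must present a verifiable certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # plain TLS servers usually skip this
    # A real proxy would also call ctx.load_cert_chain(cert, key) and
    # ctx.load_verify_locations(mesh_ca) with its workload certificate.
    return ctx


def mtls_client_context() -> ssl.SSLContext:
    """Client side: present our own certificate and verify the server's."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mesh identities are SPIFFE URIs rather than DNS names, so hostname
    # checking is replaced by an explicit identity check (peer_spiffe_ids).
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def peer_spiffe_ids(peer_cert: dict) -> list:
    """Extract SPIFFE IDs from the dict returned by SSLSocket.getpeercert()."""
    return [value for kind, value in peer_cert.get("subjectAltName", ())
            if kind == "URI" and value.startswith("spiffe://")]


cert = {"subjectAltName": (("URI", "spiffe://cluster.local/ns/web/sa/frontend"),
                           ("DNS", "frontend.web.svc"))}
print(peer_spiffe_ids(cert))  # ['spiffe://cluster.local/ns/web/sa/frontend']
```

Because the sidecar performs this handshake on the application's behalf, the application code never sees certificates at all.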

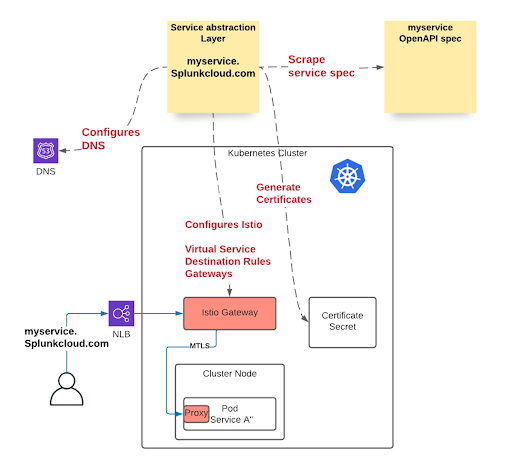

Istio manages Splunk's ingress gateways, which receive traffic from public and internal NLBs. The gateways are managed by the platform team and run in the Istio Gateway namespace, allowing users to plug into them but not modify them. The Gateway service is also provisioned with certificates to enforce TLS by default, and Validating Webhooks ensure that services can only connect to gateways for their own hostnames. Additionally, the gateways enforce request authentication at ingress, before traffic can reach application pods.
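The hostname-ownership rule can be sketched as a small Python validation function that a webhook might apply to a VirtualService's `spec.hosts`. The namespace-to-domain ownership map and the function names are hypothetical; the post does not describe how Splunk records which team owns which hostname. Note the leading dot in the suffix check, which stops `evilsearch.example.com` from passing as a subdomain of `search.example.com`.

```python
# Hypothetical ownership map: which DNS domains each namespace may serve.
OWNED_DOMAINS = {
    "search": ("search.example.com",),
    "billing": ("billing.example.com",),
}


def host_allowed(namespace: str, host: str) -> bool:
    """A host is allowed if it equals, or is a subdomain of, an owned domain."""
    for domain in OWNED_DOMAINS.get(namespace, ()):
        if host == domain or host.endswith("." + domain):
            return True
    return False


def validate_virtual_service(namespace: str, virtual_service: dict):
    """Return (allowed, rejected_hosts) for a VirtualService manifest."""
    hosts = virtual_service.get("spec", {}).get("hosts", [])
    rejected = [h for h in hosts if not host_allowed(namespace, h)]
    return (not rejected, rejected)


vs = {"spec": {"hosts": ["api.search.example.com", "billing.example.com"]}}
print(validate_virtual_service("search", vs))  # (False, ['billing.example.com'])
```

Rejecting at admission time means a misconfigured or malicious team cannot hijack another team's hostname on the shared gateway.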

Because Istio and related K8S objects are relatively complex to configure, Splunk created an abstraction layer: a controller that configures everything for a service, including virtual services, destination rules, gateways, certificates, and more. It also sets up DNS records that point directly to the right NLB, making it a one-click solution for end-to-end network deployment. For more complex use cases, service teams can still bypass the abstraction and configure these settings directly.
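The core of such a controller is a rendering step that expands a small, team-facing spec into several Istio objects. The Python sketch below is hypothetical throughout: the `ExposedService` input, the `istio-gateways` namespace, the naming scheme, and the `istio: ingressgateway` selector are assumptions, and certificate issuance, DNS, and the reconcile loop that applies the objects are not shown. The Istio fields used (`servers`, `tls.credentialName`, `gateways`, `trafficPolicy.tls.mode: ISTIO_MUTUAL`) are standard `networking.istio.io` API fields.

```python
from dataclasses import dataclass


@dataclass
class ExposedService:
    """Hypothetical minimal input a service team hands to the controller."""
    name: str
    namespace: str
    hostname: str
    port: int


GATEWAY_NAMESPACE = "istio-gateways"  # hypothetical platform-owned namespace
ISTIO_API = "networking.istio.io/v1beta1"


def render(svc: ExposedService) -> list:
    """Expand one ExposedService into the Istio objects it needs."""
    gateway_name = f"{svc.namespace}-{svc.name}"
    in_mesh_host = f"{svc.name}.{svc.namespace}.svc.cluster.local"
    gateway = {
        "apiVersion": ISTIO_API,
        "kind": "Gateway",
        "metadata": {"name": gateway_name, "namespace": GATEWAY_NAMESPACE},
        "spec": {
            "selector": {"istio": "ingressgateway"},
            "servers": [{
                "port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                # TLS by default: the certificate Secret lives next to the gateway.
                "tls": {"mode": "SIMPLE", "credentialName": f"{gateway_name}-tls"},
                "hosts": [svc.hostname],
            }],
        },
    }
    virtual_service = {
        "apiVersion": ISTIO_API,
        "kind": "VirtualService",
        "metadata": {"name": svc.name, "namespace": svc.namespace},
        "spec": {
            "hosts": [svc.hostname],
            "gateways": [f"{GATEWAY_NAMESPACE}/{gateway_name}"],
            "http": [{"route": [{"destination": {
                "host": in_mesh_host, "port": {"number": svc.port}}}]}],
        },
    }
    destination_rule = {
        "apiVersion": ISTIO_API,
        "kind": "DestinationRule",
        "metadata": {"name": svc.name, "namespace": svc.namespace},
        # Gateway-to-workload traffic uses Istio-issued mutual TLS certificates.
        "spec": {"host": in_mesh_host,
                 "trafficPolicy": {"tls": {"mode": "ISTIO_MUTUAL"}}},
    }
    return [gateway, virtual_service, destination_rule]


objects = render(ExposedService("api", "search", "api.search.example.com", 8080))
print([o["kind"] for o in objects])  # ['Gateway', 'VirtualService', 'DestinationRule']
```

Keeping the input this small is the point of the abstraction: teams declare a hostname and a port, and the platform decides how TLS, routing, and in-mesh encryption are wired.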

Pain Points

While Splunk's architecture meets many of our needs, there are a few pain points worth discussing. Istio deploys one Envoy sidecar per application pod, which is an inefficient use of resources. In addition, when a particular application has unique needs from its sidecar, such as additional CPU or memory, it can be difficult to adjust these settings without adjusting them for all sidecars in the mesh. Istio sidecar injection also involves a lot of magic: a mutating webhook adds a sidecar container to every pod as it is created, which means the running pods no longer match the pod templates in their Deployments. Additionally, injection can only happen at pod creation time, so any time a sidecar version or parameter is updated, all pods must be restarted before they pick up the new settings. Overall, this magic complicates running a service mesh in production and adds a great deal of operational uncertainty to your applications.
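The restart requirement means operators need a way to find pods still running an old proxy after an upgrade. A minimal Python sketch, assuming pod objects shaped like those returned by the Kubernetes API and relying on Istio's injected container name `istio-proxy`; the function names and image tags are hypothetical. It also checks `initContainers`, in case sidecars are injected as native sidecars.

```python
SIDECAR_NAME = "istio-proxy"  # container name used by Istio's sidecar injector


def sidecar_image(pod: dict):
    """Return the injected proxy image, or None if the pod has no sidecar."""
    spec = pod.get("spec", {})
    for container in spec.get("containers", []) + spec.get("initContainers", []):
        if container.get("name") == SIDECAR_NAME:
            return container.get("image")
    return None


def pods_needing_restart(pods: list, target_image: str) -> list:
    """Pods keep the sidecar they were created with, so a mismatch means restart."""
    stale = []
    for pod in pods:
        image = sidecar_image(pod)
        if image is not None and image != target_image:
            meta = pod.get("metadata", {})
            stale.append(f"{meta.get('namespace')}/{meta.get('name')}")
    return stale


pods = [
    {"metadata": {"namespace": "search", "name": "api-1"},
     "spec": {"containers": [{"name": "app", "image": "search:1"},
                             {"name": "istio-proxy", "image": "proxyv2:1.17.2"}]}},
    {"metadata": {"namespace": "search", "name": "api-2"},
     "spec": {"containers": [{"name": "istio-proxy", "image": "proxyv2:1.18.0"}]}},
    {"metadata": {"namespace": "batch", "name": "job-1"},
     "spec": {"containers": [{"name": "app", "image": "job:3"}]}},
]
print(pods_needing_restart(pods, "proxyv2:1.18.0"))  # ['search/api-1']
```

Pods without a sidecar are skipped rather than flagged, since restarting them would not change anything.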

The Istio project is aware of these limitations and believes they will be substantially improved by the new ambient mode for Istio. In this mode, Layer 4 concerns such as identity and encryption will be handled by a daemon running on each node, outside the application's pod. Layer 7 features will still be handled by Envoy, but Envoy will run in a separate pod as part of its own deployment, rather than relying on the magic of sidecar injection. Application pods will not be modified in any way in ambient mode, which should add a good deal of predictability to service mesh operations. Ambient mode is expected to reach Alpha quality in Istio 1.18.

Conclusion

With all these layers of network security at Splunk Cloud, it helps to step back and follow the life of a request as it traverses them. When a client sends a request, it first connects to the NLB, where the connection is allowed or blocked by the VPC's Network ACLs. The NLB then forwards the request to one of the ingress nodes, where the Envoy ingress gateway terminates TLS and inspects the request at Layer 7, choosing to allow or block it. The gateway then uses ExtAuthZ to check that the request is properly authenticated and within quota before letting it into the cluster. Next, the gateway proxies the request upstream, and Kubernetes NetworkPolicies determine whether this hop is allowed. Finally, the sidecar in front of the workload terminates the mutual TLS connection, inspects the request at Layer 7, and, if it is allowed, forwards it to the workload in clear text.
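The life of a request can be summarized as an ordered chain of checks in which any layer can stop the request. The Python sketch below is purely illustrative: each lambda is a hypothetical stand-in for a layer (the blocked range, the `/internal/` and `/api/` path rules, and the always-permitted NetworkPolicy hop are assumptions), while the real checks live in AWS, Envoy, and Kubernetes.

```python
import ipaddress
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Request:
    source_ip: str
    path: str
    authenticated: bool
    within_quota: bool


# Hypothetical stand-ins for each layer, in the order the request meets them.
BLOCKED_RANGE = ipaddress.ip_network("198.51.100.0/24")

LAYERS: List[Tuple[str, Callable[[Request], bool]]] = [
    ("VPC network ACL",
     lambda r: ipaddress.ip_address(r.source_ip) not in BLOCKED_RANGE),
    ("ingress gateway (TLS + Layer 7)", lambda r: not r.path.startswith("/internal/")),
    ("ExtAuthZ", lambda r: r.authenticated and r.within_quota),
    # Assumed: the workload's NetworkPolicy admits traffic from the gateway.
    ("Kubernetes NetworkPolicy", lambda r: True),
    ("workload sidecar (Layer 7)", lambda r: r.path.startswith("/api/")),
]


def traverse(req: Request, layers=LAYERS) -> str:
    """Walk the layers in order; the first one that says no stops the request."""
    for name, allows in layers:
        if not allows(req):
            return f"blocked at {name}"
    return "delivered to workload"


print(traverse(Request("203.0.113.7", "/api/search", True, True)))   # delivered to workload
print(traverse(Request("203.0.113.7", "/api/search", False, True)))  # blocked at ExtAuthZ
```

Modeling the layers as independent predicates makes the defense-in-depth argument explicit: a gap in one check is only exploitable if every other layer also lets the request through.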

Securing Splunk's cloud native network stack while meeting the scalability needs of a large enterprise requires careful security planning at each layer.

While applying identity, observability, and policy principles at every layer in the stack may appear redundant at first glance, each layer is able to make up for the shortcomings of the others, so that together these layers form a tight and effective barrier to unwanted access.

If you are interested in diving deeper into Splunk's network security stack, you can watch our CloudNativeSecurityCon presentation.